Exciting news! This week, lab member Kacy Hatfield (Media Arts and Sciences MS student, co-advised by Dr. Pavan Turaga) is attending CVPR 2024 and presenting her work on “wasp deep fakes” at the 2024 CV4Animals workshop. This is our first formal collaboration with the Tibbetts Lab at the University of Michigan.

Kacy Hatfield

MS Student

Media Arts and Sciences

(co-advised with Dr. Pavan Turaga, GML)

The Tibbetts Lab specializes in understanding the role of individual recognition (IR) in animal behavior, particularly in forming and maintaining social hierarchies. Their model organisms for studying IR are paper wasps of the genus Polistes, particularly Polistes fuscatus and Polistes dominula. Both species have been shown to be sensitive to the facial markings of other individuals, and these markings can predict the outcomes of aggressive interactions. In P. dominula, facial features appear to be honest signals of a wasp's resource holding potential (RHP), so wasps gain a lot of information by decoding them. In contrast, the large variation across P. fuscatus faces has no consistent correlation with RHP. Instead, these wasps seem to remember the idiosyncratic, holistic facial patterns of wasps they have watched interacting with others, and they modify their behavior based on the outcomes of those observed interactions. In other words, P. fuscatus eavesdrops on other wasps so that it can best respond to them when it later encounters them and recognizes them by their unique facial patterns. Check out some examples of these different faces below (via Elizabeth Tibbetts).

Normally, when researchers like Tibbetts experiment with IR, they physically alter the faces of real wasps (e.g., by adding paint) either before or after those wasps engage in social interactions with others. However, other work with social insects has shown that insects respond to videos of other insects with the same behavioral patterns they would direct toward a real, live insect. Consequently, generative models that can produce realistic-looking synthetic videos of wasps might allow for a much wider range of behavioral manipulations.

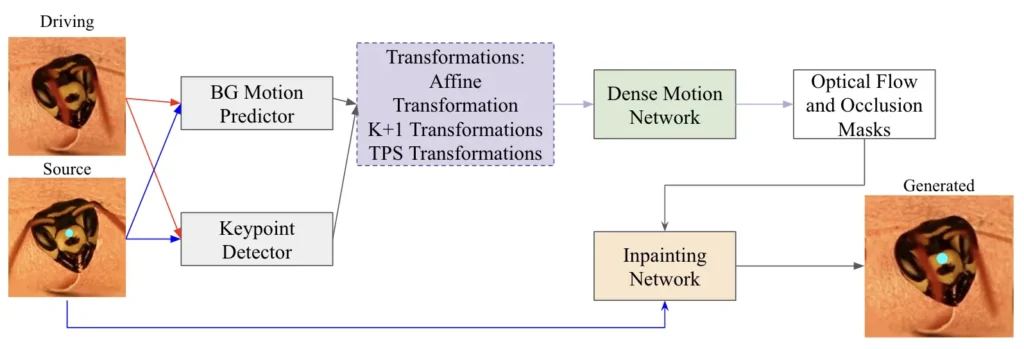

So, in Kacy’s CV4Animals paper, she evaluated how well the thin-plate spline motion (TPSM) model could animate a static image of a wasp face using the motion from a driving video of another wasp face. Her basic pipeline is shown below.
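The “thin-plate spline” in TPSM refers to the kind of warp the model uses: it estimates keypoints on the source image and driving frames and uses thin-plate spline (TPS) transformations between them to describe how pixels should move. To illustrate what a single TPS transformation does, here is a minimal NumPy sketch, written for this post and not taken from the TPSM code base, that fits a 2D TPS to a handful of keypoint correspondences and then warps arbitrary points. The names `fit_tps`, `apply_tps`, and `_tps_kernel` are hypothetical.

```python
import numpy as np


def _tps_kernel(r2: np.ndarray) -> np.ndarray:
    """Radial basis U = r^2 * log(r^2), defined as 0 where r = 0."""
    safe = np.where(r2 == 0.0, 1.0, r2)  # log(1) = 0, so U(0) = 0 without warnings
    return r2 * np.log(safe)


def fit_tps(src: np.ndarray, dst: np.ndarray):
    """Fit a 2D thin-plate spline mapping keypoints src -> dst (both shape (n, 2)).

    Assumes n >= 3 distinct, non-collinear keypoints.
    Returns (control points, nonlinear weights (n, 2), affine part (3, 2)).
    """
    n = src.shape[0]
    # Pairwise squared distances between control points, shape (n, n).
    d2 = np.sum((src[:, None, :] - src[None, :, :]) ** 2, axis=-1)
    K = _tps_kernel(d2)
    P = np.hstack([np.ones((n, 1)), src])  # affine basis [1, x, y], shape (n, 3)

    # Standard TPS block system: [[K, P], [P^T, 0]] @ [w; a] = [dst; 0]
    L = np.zeros((n + 3, n + 3))
    L[:n, :n] = K
    L[:n, n:] = P
    L[n:, :n] = P.T
    rhs = np.zeros((n + 3, 2))
    rhs[:n] = dst

    params = np.linalg.solve(L, rhs)
    return src, params[:n], params[n:]


def apply_tps(points: np.ndarray, ctrl: np.ndarray, w: np.ndarray, a: np.ndarray) -> np.ndarray:
    """Warp points of shape (m, 2) with a fitted TPS."""
    d2 = np.sum((points[:, None, :] - ctrl[None, :, :]) ** 2, axis=-1)  # (m, n)
    affine = np.hstack([np.ones((points.shape[0], 1)), points]) @ a     # (m, 2)
    return affine + _tps_kernel(d2) @ w


# Example: five keypoints, two of which move slightly.
src = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
dst = src + np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.0], [0.0, 0.0], [0.05, 0.1]])
ctrl, w, a = fit_tps(src, dst)
warped = apply_tps(np.array([[0.25, 0.25], [0.75, 0.5]]), ctrl, w, a)
```

A TPS is the smoothest (minimum bending energy) mapping that passes exactly through the given keypoints, and when the keypoints move by a pure affine transform, the nonlinear weights `w` vanish. The kernel `r^2 log(r^2)` is twice the textbook `r^2 log r`; the constant factor is absorbed into the fitted weights, so the resulting warp is the same.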

This approach led to outputs like the one shown below, which pairs a frame of a driving video (containing the behavior to be “transferred” to another wasp) with a frame of the generated video (showing the target wasp performing that behavior). To keep the evaluation of TPSM's practicality simple, the image of the wasp face to be animated was just a sample frame from the driving video, augmented with a blue dot. A successful test would therefore generate a video with the same motion as the driving video, but with the blue dot staying fixed to the right location on the face as it moves.
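One hypothetical way to make the blue-dot check quantitative is to draw the dot on the source frame, then locate it in every generated frame by color thresholding, so its trajectory can be compared against where that point of the face sits in the driving video (for example, from hand annotation). The sketch below assumes RGB frames stored as `uint8` NumPy arrays; the helper names (`add_blue_dot`, `locate_blue_dot`, `track_dot`) and the color thresholds are illustrative assumptions, not the procedure from the paper.

```python
import numpy as np


def add_blue_dot(frame: np.ndarray, center: tuple, radius: int = 3) -> np.ndarray:
    """Return a copy of an RGB uint8 frame (H, W, 3) with a solid blue disk at center=(row, col)."""
    out = frame.copy()
    rows, cols = np.ogrid[: frame.shape[0], : frame.shape[1]]
    mask = (rows - center[0]) ** 2 + (cols - center[1]) ** 2 <= radius ** 2
    out[mask] = (0, 0, 255)  # pure blue in RGB order
    return out


def locate_blue_dot(frame: np.ndarray, min_blue: int = 200, max_other: int = 80):
    """Centroid (row, col) of strongly blue pixels, or None if no such pixels exist.

    Thresholds are hypothetical and would need tuning on real generated frames.
    """
    f = frame.astype(np.int32)
    mask = (f[..., 2] >= min_blue) & (f[..., 0] <= max_other) & (f[..., 1] <= max_other)
    if not mask.any():
        return None  # dot lost, e.g. blurred away or hidden in this frame
    rows, cols = np.nonzero(mask)
    return float(rows.mean()), float(cols.mean())


def track_dot(frames):
    """Dot centroid per generated frame; None marks frames where the dot was not found."""
    return [locate_blue_dot(f) for f in frames]
```

Frames where `locate_blue_dot` returns `None`, or where the centroid drifts away from the annotated face location, would flag generated videos in which the dot failed to stay attached to the face.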

You can view video outputs from this process on the GitHub page for the paper. As you can see, the generated videos that attempt to track wasp motion are far less impressive than generated videos that track human motion. We think this is partly due to the wasps' antennae, which frequently obscure the face (a challenge that TPSM typically does not have to handle in conventional tests of its performance). So, we are working on: (a) testing other kinds of models, (b) improving the training cases for the TPSM instance we’re using, and (c) combining TPSM with more sophisticated pre-processing pipelines that help it focus on the face itself.

For more details, check out:

- The CV4Animals extended abstract

- The GitHub page for the paper

- The poster that Kacy presented during CV4Animals at CVPR2024

We’re excited to continue this work! We believe this is the first time generative models have been used to study IR in an invertebrate. Other groups have used computer graphics techniques to render 3D models for testing hypotheses about perception and behavior in other animals; we hope that generative AI models like these can add even more tools for testing more complex hypotheses about social behavior and perception.